Rust and microservices

Once Linus Torvalds blesses something, that thing is destined to achieve world domination - exemplified by Linux and Git. Now Rust has received the royal nod - it has been accepted as another language for Linux kernel development. Efforts are underway to rewrite everything in Rust, from the ls utility and other everyday command-line tools to entire graphical desktop environments. In this article I discuss similarities and differences between Rust and other mainstream languages. I implement a simple web service in Python, Javascript, C++, C#, Java, and Rust, and race the implementations against each other to compare their performance.

This started as a neophyte's exploration of Rust and grew into a benchmark of asynchronous web services in different languages.

I started researching Rust to understand what causes so much enthusiasm about the language among experienced software developers and novices alike. The language designers at Mozilla were motivated by the desire to work with a performant language that is safer and simpler than C++. I have written C++ code for decades, watched the language evolve, been frustrated by its many foot-guns [1], and also been delighted by the simplicity of code enabled by the most recent iterations of the C++ standard [2]. I took Rust for a spin and summarized my findings here, comparing its language features, tools, and performance with those of the languages I am most familiar with: C++, C#, Java, Python, and Javascript.

Rust features

Rust is a statically typed language, like many other mainstream languages such as C++, C#, and Java. Static typing is practically a must for writing performant software. Languages with duck typing (such as Python and Javascript) win on ease of prototyping, but lose on performance and on the ability to scale projects to many thousands of lines of code. For example, in Python, if you successfully imported a module that contains these lines:

```python
try:
    cursor = get_db_cursor()
except SomeException:
    logger.exception(f"Do not have database access in {__name__}")
    raise
```

there is no guarantee that the variable logger is defined, or that the logger object has an exception method. You can only be reasonably sure with 100% test coverage, which is very difficult to achieve: the code in an exception handler runs only in exceptional situations, and those are hard to reproduce in tests.

Static typing also lets your code editor auto-complete as you type. Because Rust is statically typed, the editor helpfully suggests the fields and methods that you can use with the variable you have just entered, and the documentation (What does this method do? Which argument goes first and which goes second?) is instantly at hand. Static typing does not preclude having some dynamically typed variables: Rust has the std::any::Any type for that; in C++ that would be std::any, and in C# one can choose between plain object and dynamic.
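A minimal sketch of that kind of dynamic typing in Rust, using only the standard library. `describe` is a hypothetical helper that inspects a `&dyn Any` with checked downcasts. Note that this is run-time type inspection rather than duck typing in the Python sense: the code has to name each concrete type it is prepared to handle.

```rust
use std::any::Any;

// Inspects a value of unknown concrete type at run time via checked downcasts.
fn describe(value: &dyn Any) -> String {
    if let Some(n) = value.downcast_ref::<i64>() {
        format!("an i64 equal to {}", n)
    } else if let Some(s) = value.downcast_ref::<String>() {
        format!("a String containing {:?}", s)
    } else {
        "a value of some other type".to_string()
    }
}

fn main() {
    let values: Vec<Box<dyn Any>> = vec![
        Box::new(42i64),
        Box::new(String::from("hi")),
        Box::new(1.5f64),
    ];
    for v in &values {
        // `as_ref` reaches the boxed value; passing `v` itself would make the Box the Any.
        println!("{}", describe(v.as_ref()));
    }
}
```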

Another pillar of Rust is value safety. I put in this bucket const correctness and the absence of null pointers. The former means that every variable is immutable by default (unless declared with mut), and the compiler prevents you from accidentally changing it. The latter is possible thanks to the prolific use of discriminated unions (Rust enums such as Option and Result) and the compiler forcing the programmer to handle every variant of the union. Similar facilities exist in Java, C#, and C++; in C++ they are expressed with std::variant and std::optional. Rust uses such facilities where many other languages would employ null pointers (or null objects) and exceptions.
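A small sketch of both ideas, with hypothetical functions `default_port` and `describe_port`: bindings are immutable unless marked `mut`, and a possibly missing value is an `Option` that the caller must unpack before using it.

```rust
// Returns Some(port) for a known service and None otherwise: no null pointer involved.
fn default_port(service: &str) -> Option<u16> {
    match service {
        "http" => Some(80),
        "https" => Some(443),
        _ => None,
    }
}

// The port cannot be used without handling the None case; dropping the None arm
// would make the match non-exhaustive and the program would not compile.
fn describe_port(service: &str) -> String {
    match default_port(service) {
        Some(port) => format!("{} uses port {}", service, port),
        None => format!("{} has no known default port", service),
    }
}

fn main() {
    let count = 1; // immutable by default
    // count += 1; // would not compile: cannot assign twice to an immutable variable
    let mut total = count; // mutability has to be requested explicitly
    total += 1;
    println!("{}", total);
    println!("{}", describe_port("https"));
    println!("{}", describe_port("gopher"));
}
```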

Like C++, and unlike the garbage-collected C#, Java, Python, and Javascript, Rust manages resources through deterministic object lifetimes and smart pointers. A novel compile-time mechanism, the borrow checker, was added to prevent "use after free" errors.
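To illustrate deterministic destruction, here is a sketch built around a hypothetical `Connection` type whose `Drop` implementation appends to a shared log. The log shows that each value is released exactly when its scope ends, innermost first, without waiting for a garbage collector.

```rust
use std::cell::RefCell;
use std::rc::Rc;

type Log = Rc<RefCell<Vec<String>>>;

// A hypothetical resource that records its own release in a shared log.
struct Connection {
    name: &'static str,
    log: Log,
}

impl Drop for Connection {
    // Runs automatically when the owning binding goes out of scope.
    fn drop(&mut self) {
        self.log.borrow_mut().push(format!("close {}", self.name));
    }
}

fn drop_order() -> Vec<String> {
    let log: Log = Rc::new(RefCell::new(Vec::new()));
    {
        let _outer = Connection { name: "outer", log: Rc::clone(&log) };
        {
            let _inner = Connection { name: "inner", log: Rc::clone(&log) };
            log.borrow_mut().push("inner scope ends".to_string());
        } // _inner is dropped here
        log.borrow_mut().push("outer scope ends".to_string());
    } // _outer is dropped here
    let result = log.borrow().clone();
    result
}

fn main() {
    println!("{:?}", drop_order());
}
```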

In addition, bounds checks are performed automatically at run time on each array access.

To break the safety rules enforced by the compiler, one has to mark the code block using the unsafe keyword, showing the code reviewers where to pay special attention.
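The sketch below contrasts checked and unchecked element access on a slice; `third` and `third_unchecked` are hypothetical helpers. Plain indexing and `get` are both bounds-checked, while `get_unchecked` skips the check and therefore compiles only inside an `unsafe` block, which is exactly the spot a reviewer would look at.

```rust
// Checked access: `get` returns None instead of reading past the end.
fn third(values: &[i32]) -> Option<i32> {
    values.get(2).copied()
}

// Unchecked access is allowed only inside `unsafe`; the caller's guarantee replaces the check.
fn third_unchecked(values: &[i32]) -> i32 {
    assert!(values.len() > 2, "need at least three values");
    // SAFETY: the assertion above guarantees that index 2 is in bounds.
    unsafe { *values.get_unchecked(2) }
}

fn main() {
    let v = vec![10, 20, 30];
    println!("{:?} {:?}", third(&v), third(&v[..2])); // Some(30) None
    println!("{}", third_unchecked(&v)); // 30
    // Plain indexing is checked too: v[5] panics instead of reading stray memory.
    let out_of_bounds = std::panic::catch_unwind(|| v[5]);
    println!("{}", out_of_bounds.is_err()); // true
}
```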

Another feature of the language, according to its cheerleaders, is simplicity. Simplicity is often in the eye of the beholder. Rust seems to avoid the complexity of C++ template metaprogramming, but at the cost of a powerful (and necessarily complex) macro system.
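As an illustration of macros taking over some jobs that C++ would hand to templates, here is a sketch of a declarative (`macro_rules!`) macro that stamps out one trait implementation per listed type. The trait `Describe` and the macro `impl_describe!` are hypothetical, chosen only for this example.

```rust
trait Describe {
    fn describe(&self) -> String;
}

// Generates an `impl Describe for T` block for every type in the list.
macro_rules! impl_describe {
    ($($t:ty),*) => {
        $(
            impl Describe for $t {
                fn describe(&self) -> String {
                    // stringify! turns the type tokens into a string literal.
                    format!("{} ({})", self, stringify!($t))
                }
            }
        )*
    };
}

impl_describe!(i32, f64, String);

fn main() {
    println!("{}", 7i32.describe());
    println!("{}", 2.5f64.describe());
    println!("{}", String::from("hi").describe());
}
```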

Just like C++, C#, and Java, Rust supports parameterized (generic) types. Constraints on type parameters (trait bounds) have been supported from the get-go, while it took C++ decades to go beyond the enable_if hack and add concepts in C++20.
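A minimal example of a constrained type parameter, using a hypothetical `largest` function. The trait bound `T: PartialOrd + Copy` plays the role that enable_if (or a C++20 concept) plays in C++: a call with a type that does not satisfy it is rejected at compile time, with an error that names the missing trait.

```rust
// T must be comparable (PartialOrd) and cheaply copyable (Copy).
fn largest<T: PartialOrd + Copy>(items: &[T]) -> Option<T> {
    let mut iter = items.iter().copied();
    let mut best = iter.next()?; // an empty slice yields None
    for item in iter {
        if item > best {
            best = item;
        }
    }
    Some(best)
}

fn main() {
    println!("{:?}", largest(&[3, 9, 4]));
    println!("{:?}", largest(&[1.5, -2.0]));
    // largest(&[vec![1], vec![2]]) would not compile: Vec<i32> does not implement Copy.
}
```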

Call me superficial, but what I liked most in Rust were the supporting tools. The package repository (crates.io) is a huge convenience, and so are the standard build and documentation tools (cargo and rustdoc). This is quite similar to the experience in most modern languages, but not in C++. Using a C++ library such as Boost, even in this day and age, requires manually downloading, unpacking, and building it, and the build system (bjam or CMake) is non-standard and unsightly. Some C++ package repositories are available, but the ecosystem became fragmented long before any of them became standard practice.

Whose lunch does Rust want to eat?

The most likely victims are C and C++. The head Linux honcho gave a go-ahead to using Rust in Linux device drivers, a privilege that has always been denied to C++. One of the foremost reasons for Linus's dislike of C++ is the use of exceptions. Notably, Rust does not support exceptions, unlike C++, C#, Java, Python, Javascript, and many other languages that are younger than C.

The combination of high performance and high convenience in C++ is often described as "you do not pay for the features you do not use", and that is an excellent trait to find in a programming language. Unfortunately, exceptions are not one of those features: the programmer pays for the existence of exceptions even if her code does not use them. One cost is that reasoning about the code becomes harder: every function call may return a value of the declared type, but an exception is always another possibility, a very different outcome that leads to a very different code path. There is also a run time cost, as the compiler has to take that other code path into account. Even when no exception is thrown, there can be a cost: depending on the implementation, the objects that need to be destroyed in case of an exception are registered and deregistered at run time, or the unwinding tables inflate the binary. Finally, there is a cost for the compiler writer: the generated code is more entangled, so optimizations are harder to perform.
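For comparison, a sketch of the Rust approach, where a failure is an ordinary return value of type `Result` and the `?` operator hands it back to the caller without any stack unwinding. `parse_pair` is a hypothetical helper. (Rust does have panics, which unwind by default, but they are meant for unrecoverable bugs rather than routine error handling.)

```rust
use std::num::ParseIntError;

// The signature states that parsing can fail; the caller has to deal with the error.
fn parse_pair(a: &str, b: &str) -> Result<(i64, i64), ParseIntError> {
    let x: i64 = a.parse()?; // on error, `?` returns it to the caller immediately
    let y: i64 = b.parse()?;
    Ok((x, y))
}

fn main() {
    for (a, b) in [("347248", "293898"), ("12", "twelve")] {
        match parse_pair(a, b) {
            Ok((x, y)) => println!("{} + {} = {}", x, y, x + y),
            Err(e) => println!("cannot add {:?} and {:?}: {}", a, b, e),
        }
    }
}
```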

Microservice speed test

How does software implemented in Rust perform compared to other languages? I expect a typical Rust app to run faster than the same app implemented in C# or Java, because of the overhead of their virtual machines (bytecode interpretation and just-in-time compilation) and garbage collection. Python and Javascript should be even slower, because dynamic (duck) typing piles an extra cost on top of that. However, Rust outperforming C++ is not assured. If it does perform better, the difference gives some idea of the cost of exception handling and of the burden that the language complexity places on C++ compiler and standard library implementers compared to Rust.

To leave the armchair philosophising territory and enter the strong anecdotal evidence land, I have implemented a toy microservice in Rust and raced it against the equivalent microservices written in other languages [3]. In the supplemental git repository you can find the source code for the web services I implemented for this performance competition.

I chose to implement an asynchronous [4] web service. Asynchronous web servers solve the so-called C10k problem: supporting more than 10,000 simultaneous connections to one server. In other words, an asynchronous web service can have 10k requests in progress at the same time. A synchronous server would need more than 10k threads for that, which is not something that regular operating systems on regular hardware are designed [5] to support.

Modern languages implement asynchronicity using coroutines with async/await keywords [6]. The application starts an event loop, which runs coroutines on a small number of threads, or even executes all of them in a single thread. Programming coroutines can be simpler than multithreaded programming, because a thread can be preempted at any point (even in the middle of a statement), while coroutines are co-operative and all the points where they may yield are marked with the await keyword. You will not see many of those in the microservices that we are racing, because I wanted the simplest possible microservice [7]: I do not want my server speed comparison to be obscured by the speed of dependent services (e.g., database accesses) or by the quality of various libraries (e.g., JSON serializers).
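To make the event-loop idea concrete, here is a toy single-threaded event loop built only from the Rust standard library, with no tokio involved. It is a sketch under simplifying assumptions: the hypothetical `run` function re-polls every unfinished task in round-robin order, whereas real runtimes such as tokio park idle threads and use wakers to poll only the tasks that can make progress. The hypothetical `yield_now().await` stands in for an await point such as a pending network read.

```rust
use std::cell::RefCell;
use std::collections::VecDeque;
use std::future::Future;
use std::pin::Pin;
use std::rc::Rc;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// A waker that does nothing: this toy loop re-polls every pending task anyway.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

// Pending on the first poll, ready on the second: awaiting it hands control back to the loop.
struct YieldNow {
    yielded: bool,
}

impl Future for YieldNow {
    type Output = ();
    fn poll(mut self: Pin<&mut Self>, _cx: &mut Context<'_>) -> Poll<()> {
        if self.yielded {
            Poll::Ready(())
        } else {
            self.yielded = true;
            Poll::Pending
        }
    }
}

fn yield_now() -> YieldNow {
    YieldNow { yielded: false }
}

type Task = Pin<Box<dyn Future<Output = ()>>>;

// Round-robin event loop on the current thread: poll each task in turn until all finish.
fn run(tasks: Vec<Task>) {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut queue: VecDeque<Task> = tasks.into_iter().collect();
    while let Some(mut task) = queue.pop_front() {
        if task.as_mut().poll(&mut cx).is_pending() {
            queue.push_back(task); // not finished yet: let the other tasks run
        }
    }
}

// Two coroutines log two steps each and yield between steps, so their steps interleave.
fn interleaving_demo() -> Vec<String> {
    let log = Rc::new(RefCell::new(Vec::new()));
    let mut tasks: Vec<Task> = Vec::new();
    for name in ["A", "B"] {
        let log = Rc::clone(&log);
        tasks.push(Box::pin(async move {
            for step in 0..2 {
                log.borrow_mut().push(format!("{}{}", name, step));
                yield_now().await;
            }
        }));
    }
    run(tasks);
    let result = log.borrow().clone();
    result
}

fn main() {
    println!("{:?}", interleaving_demo()); // ["A0", "B0", "A1", "B1"]
}
```

Because each coroutine gives up control only at its `.await`, the log entries can interleave only at those points, which is the co-operative behavior described above.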

Here is an implementation of a toy adder service in Rust:

In line 6, the warp::path! macro declares our service endpoint: the URL path starts with add and is followed by two integers (i64), each introduced by a slash. Thus we expect to handle a GET request with the path /add/347248/293898 and to respond with plain text that states the sum: 347248 + 293898 = 641146.
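The routing logic itself can be sketched without any web framework. The hypothetical `handle_add` function below mimics what the endpoint is described as doing: it accepts a path of the form /add/{a}/{b}, parses both segments as i64, and produces the plain-text sum. It is not warp's implementation, just a dependency-free illustration that can be tested in isolation.

```rust
// Returns the response body for /add/{a}/{b}, or None if the path does not match.
fn handle_add(path: &str) -> Option<String> {
    let mut segments = path.strip_prefix('/')?.split('/');
    if segments.next()? != "add" {
        return None;
    }
    let a: i64 = segments.next()?.parse().ok()?;
    let b: i64 = segments.next()?.parse().ok()?;
    if segments.next().is_some() {
        return None; // extra segments: the route does not match
    }
    // In this sketch, an i64 overflow is treated as a non-match instead of a panic.
    let sum = a.checked_add(b)?;
    Some(format!("{} + {} = {}", a, b, sum))
}

fn main() {
    println!("{:?}", handle_add("/add/347248/293898"));
    println!("{:?}", handle_add("/add/1/two"));
}
```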

Note how the macro can transform a stream of tokens that would absolutely stump the C/C++ preprocessor.
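A toy example of that token-level pattern matching: the hypothetical `route!` macro below accepts string literals separated by `/` tokens, much like the arguments of warp::path!, and concatenates them into one path at compile time. It is not how warp::path! is implemented; it only shows the kind of input a Rust macro can match and the C preprocessor cannot iterate over.

```rust
// Turns `"a" / "b" / "c"` into the string literal "/a/b/c" at compile time.
macro_rules! route {
    ($first:literal $(/ $rest:literal)*) => {
        concat!("/", $first $(, "/", $rest)*)
    };
}

fn main() {
    const ADD: &str = route!("api" / "v1" / "add");
    println!("{}", ADD); // /api/v1/add
}
```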

In line 7 we state what the handler of that endpoint does: it takes the two arguments and forms a string that states their sum. format! is also a macro, and a macro had to be employed because Rust functions neither accept a variable number of arguments nor allow overloads based on argument types - another choice that pleases Linus Torvalds. The subsequent lines declare the service on localhost (127.0.0.1), port 3030, and hand it to the event loop.
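Since a Rust function cannot take a variable number of arguments, variadic interfaces such as format! are written as macros. A minimal sketch with a hypothetical `sum_all!` macro, which expands `sum_all!(1, 2, 3)` into the expression `1 + 2 + 3`:

```rust
// Accepts one or more comma-separated expressions and chains them with `+`.
macro_rules! sum_all {
    ($first:expr $(, $rest:expr)*) => {
        $first $(+ $rest)*
    };
}

fn main() {
    println!("{}", sum_all!(1, 2, 3)); // 6
    println!("{}", sum_all!(1.5, 2.5)); // 4
    // format! is variadic in the same way, which is why it is a macro too.
    println!("{}", format!("{} + {} = {}", 2, 3, sum_all!(2, 3))); // 2 + 3 = 5
}
```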

The full source code for Rust and the other languages, as well as the code that I used for load-testing the implementations of this service, is available in my git repository. It is a joy to see how little code needs to be written to implement a web service in this day and age. The C++ code is somewhat more voluminous than the others, but do not blame C++ too much for that: the designers of the library I chose in C++ (Boost::beast) aimed at a fairly low-level framework, good for implementing both synchronous and asynchronous web services.

Speed test results

The performance measurements were done on a Dell XPS 13 laptop running Ubuntu 20.04. Some hardware info:

- Intel Core i7-10710U CPU @ 1.10GHz: 6 cores, 12 hardware threads (12 logical CPUs)
- 16 GB RAM
- The tests do not perform any storage IO in the steady state, so the storage information is irrelevant.

The tests were performed for the microservice implemented in the following web frameworks:

- Tokio/Warp (Rust)
- Boost::beast (C++)
- FastAPI under Python 3.8
- Node Express (Javascript)
- Bayou (Java)
- ASP.NET (C#)

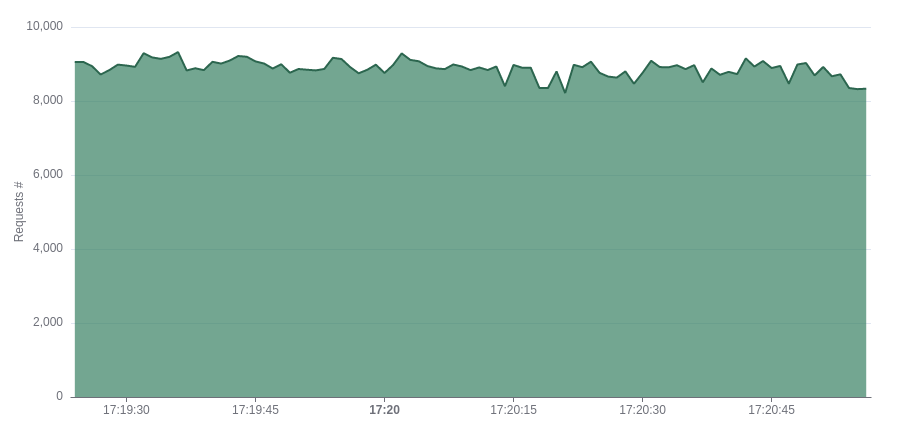

Each microservice was bombarded with requests from 12 web clients. The following figure shows the observed performance for the microservice implemented in Python.

Performance of the FastAPI microservice under Python 3.8. The graph shows the number of requests per second versus time. After several minutes of that load, my laptop's fan turns on and the performance drops by about 20%. This eventual drop is caused by thermal protection kicking in and happens in all the other tests as well. It is a feature of the particular hardware I am testing on, not of the tested software.

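The comparative performance and footprint of each microservice are summarized in the following table. VIRT, RES, and SHR are the virtual, resident, and shared memory sizes as reported by top, in KiB (263.1g stands for 263.1 GiB); %CPU is the CPU usage relative to one core; Rate is the number of requests handled per second. Follow the links in the last column to see the report for the specific microservice implementation.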

| VIRT | RES | SHR | %CPU | Rate (requests/s) | Implementation |
|---|---|---|---|---|---|
| 817,708 | 3,244 | 2,940 | 155 | 75k | Tokio/Warp (Rust) |
| 8,272 | 4,668 | 4,180 | 100 | 47k | Boost::beast (C++) |
| 150,040 | 37,876 | 16,112 | 100 | 9k | |
| 152,896 | 41,496 | 17,348 | 100 | 11k | |
| 619,840 | 77,376 | 30,088 | 106 | 10k | |
| 6,450,576 | 134,620 | 27,484 | 100 | 59k | Bayou (Java) |
| 8,438,220 | 270,052 | 28,140 | 234 | 69k | |
| 263.1g | 253,468 | 64,360 | 328 | 62k | |

As you can see in the table, Rust is the king of the performance roost, but not if you consider its performance per CPU core. I was not able to restrict the Rust microservice to a single CPU core, so it consumed 155% of one. Normalized to one core, the Rust microservice handles 75k/1.55 ≈ 48k requests per second. That is about the same as the microservice implemented in C++ (47k), and, surprisingly, less than the Bayou (Java) microservice, which handles 59k requests per second. That observation may move you towards implementing asynchronous web services in Java from now on, but be aware that Java does not natively support the async/await keywords, so the ecosystem for asynchronous programming in Java is not great. The Bayou framework uses futures and callbacks to implement asynchronicity.
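The per-core normalization used above is just the request rate divided by the fraction of a core consumed (%CPU / 100). A small sketch applying it to the three rows discussed in the text; `per_core` is a hypothetical helper, and the numbers are copied from the table.

```rust
// Requests per second normalized to one CPU core: rate / (%CPU / 100).
fn per_core(rate: f64, cpu_percent: f64) -> f64 {
    rate / (cpu_percent / 100.0)
}

fn main() {
    // (implementation, requests per second, %CPU) from the table.
    let rows = [
        ("Rust", 75_000.0, 155.0),
        ("C++", 47_000.0, 100.0),
        ("Java (Bayou)", 59_000.0, 100.0),
    ];
    for (name, rate, cpu) in rows {
        println!("{:<14} {:>8.0} requests/s per core", name, per_core(rate, cpu));
    }
}
```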

Another thing to notice is that the Rust and C++ microservices consume much less memory (a RES of 3,244 and 4,668, against 37,876 to 270,052 for the other implementations). This matters in today's world, where microservices are packed into containers and placed on any cloud machine that has enough resources for them. Thus Rust and C++ microservices can be inserted into various micro-corners of the cloud where Python and Node microservices would not fit, and C# or Java web services would not deserve the prefix "micro".